In Defence of Machines: Why European Proposals to Regulate AI Per Se Involve a Category Error and Would Prove Harmful

By: Guest Author

Subjects: Digital Economy European Union

Brian Williamson is a Partner at the consultancy Communications Chambers. The views in this blog are his own.

The European Commission published a proposed legal framework for regulation of Artificial Intelligence (AI) in April 2021. Extensive regulation is proposed which would prohibit some applications and require prior approval for others, based on an a priori view of risk for classes of applications.

AI is a general-purpose technology, like steam, electricity and computing before it: it will likely find application across all areas of human endeavour. As Marc Andreessen said a decade ago, ‘software is eating the world’, and AI is software.

The fact that AI is a general-purpose technology should give us pause for thought about whether, and what it would mean, to regulate AI. We did not regulate steam or computers per se, though we did regulate aspects of railway services, for example, which utilised steam and later utilised computers.

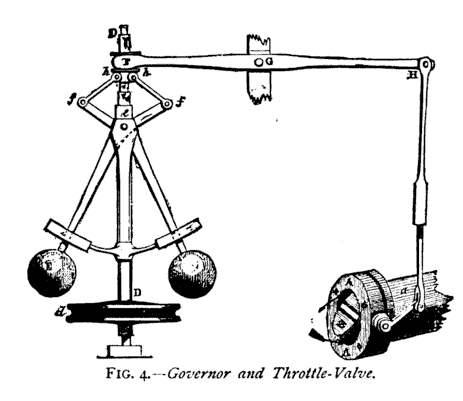

There is also perhaps a belief that AI is fundamentally different because AI systems may operate with a degree of autonomy. But this is hardly new, as the example of a mechanical speed governor for steam engines illustrates.

The example of a mechanical governor is also interesting because it embedded an autonomous decision-making system in what was arguably a high-risk system, and it would arguably not have been wise to design the governor to allow a human operator to override it.

A tenet of the proposed European approach is that it should be technology neutral. Yet the focus on ‘artificial’ in relation to intelligence is hardly neutral. We want to use AI wherever and whenever it is either more efficient or effective (say safer or less biased) to use it as a substitute or complement in relation to human tasks. Further, in some instances new possibilities open up since AI can perform previously prohibitively expensive applications, for example, mass-market real-time language translation.

There are two ways of ensuring the desired neutrality in order to maximise the potential productivity and other societal gains from AI: apply existing rules (intelligently) to systems that incorporate AI; or, should an application of AI prompt a rethink of existing rules, aim to frame the new rule in a technology-neutral manner. The bar should be the same whether AI is an input to production or not.

If we fail to follow a neutral approach, we risk forgoing opportunities for productivity growth, the fundamental driver of income and leisure, or curtailing opportunities to enhance EU values and fundamental rights. The risks associated with AI are not in general risks of AI per se, but risks associated with activities into which AI may be incorporated, and where existing human decisions and decision-making institutions are known to involve risks. The proposed approach is therefore not risk based, since its application could perpetuate approaches that carry higher risk than alternatives incorporating AI.

The proposed European regulation seeks to address the ‘opacity, complexity, bias, a certain degree of unpredictability and partially autonomous behaviour of certain AI systems’. Yet human decision making suffers from these characteristics alongside others that are harmful. In particular, human decisions are noisy (they vary for the same inputs, even for the same individual) and at times corruptible, namely exhibiting bias in return for reward. AI is arguably far more transparent than human decision makers and much more readily ‘interrogated’ to see how it responds in a range of situations, including so-called edge cases.

The European approach also seeks to be future proof, yet if it does not tackle human intelligence and its failings alongside artificial intelligence and its failings, it cannot be future proof. It is inevitable, where machines can perform with, say, greater safety or less bias than humans and human institutions, that some will call for humans to be prohibited from making specific decisions.

The bias against judicious reliance on machine intelligence is further exacerbated by Article 14(4) which proposes the ability to disregard or shut down what is referred to as a high-risk AI system (but is in fact an activity that may be high risk and incorporates AI). Specifically, it is proposed that individuals be able to “intervene on the operation of the high-risk AI system or interrupt the system through a “stop” button or a similar procedure.”

Not only might allowing such intervention make a high-risk system more risky overall, the regulation would also discourage the development of AI safety systems that could go beyond human capabilities given that safety would have to be acceptable if the AI system were interrupted.

A number of AI systems are categorised as high-risk under the proposed regulation. For example, Recital 37 states that ‘AI systems used to evaluate the creditworthiness of persons should be classified as high-risk…’. But surely this implies, if valid, that ‘systems’, whether AI or not, used to evaluate creditworthiness should be classified as high risk?

Removing the ‘AI’ from ‘AI systems’ for each category of high-risk would provide a sense check on what is proposed (would you apply the proposed regulations to other systems?) and a prompt to consider the adequacy of existing regulation in relation to systems including AI systems. Indeed, the US Federal Trade Commission, in an April 2021 paper on regulation of AI, makes precisely this point in relation to creditworthiness:

“Whether caused by a biased algorithm or by human misconduct of the more prosaic variety, the FTC takes allegations of credit discrimination very seriously, as its recent action against Bronx Honda demonstrates.”

The proposed regulation would mandate an ‘innovation only with permission’ culture for a wide range of activities, yet much (though not all) innovation and adoption of technology is driven by decentralised decisions and competing ideas and entities. The compliance costs involved with the proposed approach would appear to preclude individuals and small teams from developing and marketing applications in many fields, yet we know this was an engine of innovation and growth for the app economy.

The counterpart of this disincentive for decentralised and often small-scale innovation is an incentive for larger enterprises to take a more dominant role, and for platforms to focus on integrated AI innovation rather than offering open APIs for third parties. Finally, the approach would act as a barrier to trade with the rest of the world, and openness to trade and ideas is known to stimulate local innovation.

The proposed European approach to regulation of AI is, in contrast, top-down, with a range of applications of AI either banned or requiring permission before they can be offered within the European Union. Further, Article 43(4) states that: “High-risk AI systems shall undergo a new conformity assessment procedure whenever they are substantially modified, regardless of whether the modified system is intended to be further distributed or continues to be used by the current user.”

There remains an opportunity to translate the ambition behind the proposed regulation of AI into an initiative to re-examine existing regulation through the lens of what AI may make possible: to ask whether existing regulation needs reinterpretation so that it applies to systems whether they incorporate AI or not, or whether a more – not less – technology-neutral approach to promoting the European economy, values and fundamental rights can be adopted, one which is neutral between the best of what machines and humans have to offer. Taking on that challenge truly would be a contribution to a “Union that strives for more”.